Rigorous Work with Fallible AI

Because the real hallucination is expecting perfection

It’s understandable to be suspicious of “vibe coding” in corporate settings, especially in high-stakes software and data science. Each hallucinated or confidently wrong answer chips away at trust.

But “Is the AI accurate?” is the wrong question. A more useful one is: “Have I built a system that produces trustworthy outputs from fallible components?”

TL;DR

Reliability isn’t a model property - it’s a system property built around fallible components. Just like it always has been.

Treat repetition as a signal: encode it - starting with small misses, then graduating into workflows and tools.

You’ll never get 100% adherence, so where you encode matters: target the right instruction for the right time with minimal distraction.

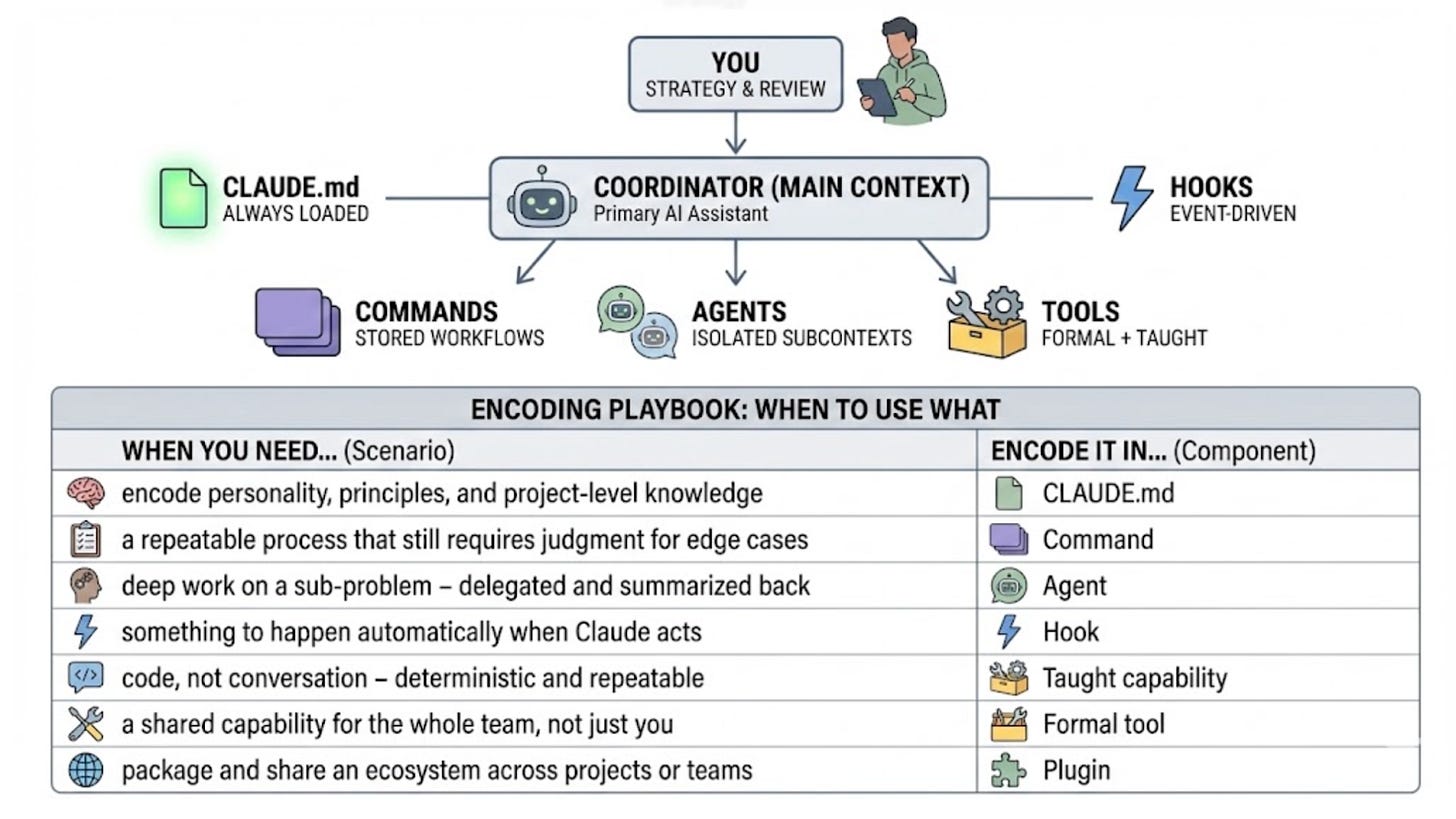

Use a layered toolkit (CLAUDE.md, commands, agents, hooks, tools) so your training sticks.

“Infallibly correct” has never been the bar for humans or software. Data centers assume hardware will fail; engineering teams assume people will ship bugs and occasionally nuke production. Reliability comes from designing systems around fallible parts. Treating AI the same way - something that is both powerful and failure-prone, and that deserves a real investment in tooling and training - has completely changed how I work.

Over the last year of pushing Claude Code to its limits, I’ve hit every classic failure: confident fabrications, fallbacks that hide real errors, and imaginary databases springing up when connections fail. At the same time, my analysis work has become deeper, faster, more rigorous, and easier for other people to replicate and build on. Not because the AI is magically correct, but because it’s extremely capable at the parts that bottleneck humans: writing and refactoring code, building full-stack glue, and exploring more variations than I could reasonably do by hand.

I’ve stopped hand-coding analyses in one-off notebooks and moved toward building shared AI tooling, pipelines, and reusable workflows that enable higher-quality analyses at scale for myself and my team - far more than I’d ever dreamed of being “in scope” for a single data scientist.

This is my personal playbook for that shift: how I see the human role changing, how to continuously up-level your work with DRY for AI, and how to architect a practical ecosystem in Claude Code to make rigorous work with fallible models not just possible, but normal.

Your Job Has Changed

The ceiling of what an AI coding assistant can do is not set by the model’s raw “IQ,” but by the tools, context, and training you surround it with. Most of the frustrating “out of the box” quirks you’ve hit aren’t permanent traits of AI - they’re missing scaffolding: principles, knowledge of your stack, and access to the systems you rely on.

You wouldn’t hire the smartest engineer you’ve ever met, hand them a laptop on day one, and say: “Here’s an obscure, high-stakes problem in a codebase you’ve never seen. Figure it out.” You’d give them docs, code labs, a clear scope, and start with a small area of ownership, increasing responsibilities over time. Your AI deserves the same grace.

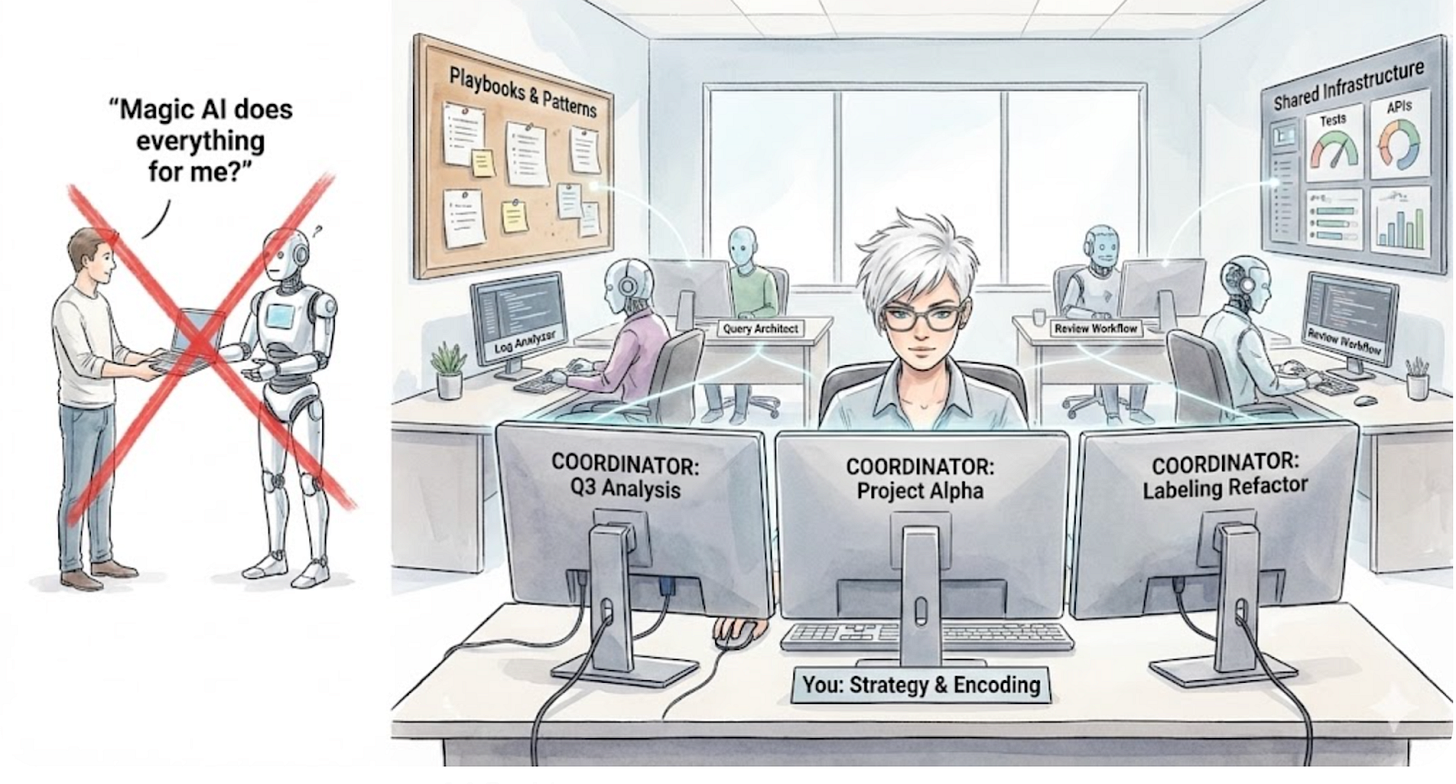

Your goal is to train your AI until it can operate at your task-level altitude - as a peer, not a junior you’re endlessly correcting. When you open a session to start a project, the agent you’re interacting with is your coordinator and collaborator. It should know your task, your principles, and your stack well enough to think at the level you do. Its precious context should be spent on the highest-level problem you’re solving, not constantly re-deriving basic functions.

It reaches that level of abstraction the same way you do: by leaning on an ecosystem. Tools, scripts, agents, and services become its infrastructure and “colleagues,” each with a clear job. Together they behave like a small AI org that extends what one human can realistically cover.

As you design that ecosystem, your scope actually expands rather than shrinks. You end up responsible for more systems, but at an operational level, not an implementation level. You don’t need every API and library call in your head; you do need a solid mental model of how the pieces fit together and when something smells off. The learning here is non-optional - most bad outcomes I see come from skipping that step - and the AI’s job is to carry the mechanical recall and boilerplate so you can focus on the reasoning and design.

That might sound like a daunting amount of training and architecture. It isn’t. The system emerges from a simple discipline: fix each issue once, properly, in a way the system can remember, and let those fixes compound over time. Kieran Klaassen calls this “compounding engineering” - building systems where every bug, review, and decision becomes a permanent lesson rather than a one-off fix. In practice, the main way I do that with AI is DRY for AI: treating repetition as a signal that something should be encoded into the AI’s ecosystem so it becomes shared, reusable behavior, not a constant re-teach.

Note: Claude Code is used as the running example as I’ve found its configurability fits well with my data science work, but the principles can be applied more broadly.

DRY for AI

It can feel like a victory - even vindication - when you hand-code something faster than your AI pair programmer.

But in a world of increasingly capable AI agents, DIY is technical debt: you’re keeping yourself in the loop on work the model could handle reliably if you gave it the right scaffolding.

Your time is better spent building that tooling than racing it line by line.

DRY - Don’t Repeat Yourself - is foundational to software engineering. Copy-paste code? Refactor into a function. Same logic twice? Abstract into a module. The same principle applies to AI work, just at a different abstraction layer: not just in code, but in how you run analyses, correct mistakes, and coordinate workflows.

Traditional DRY: Don’t copy-paste code → write a function.

AI DRY: Don’t repeat corrections, access patterns, or training → encode the principle once.

The classic DRY tradeoff - “will the time I save outweigh the time to automate?” - is radically skewed by AI. The time to build drops toward zero, while the impact multiplies as properly designed tools scale instantly across your own and your teammates’ projects.

The diagnostic question: Where am I repeating myself, and what kind of repetition is it?

1. Repeated Corrections

Any prompt I’ve given more than twice goes in this category. Doubly so if curse words were involved. This is usually the first, most obvious layer of failures and fixes.

“One paved path or clear failure, do NOT create fallbacks.”

“When you make a PR, use pull_request_template.md, be professional, no emojis.”

The simplest first step you can take is to encode these straight into a CLAUDE.md file. Later in this post, I’ll walk through how I refactor many of these into other layers of the toolkit that enforce the behavior more reliably. But the critical starting point is clearly identifying and articulating the incorrect underlying behavior, and what behavior you want to see instead.
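To make the “one paved path or clear failure” correction concrete, here is a minimal Python sketch of what that rule asks for in code. The `load_config` function and `ConfigError` class are hypothetical names chosen for illustration, not part of any real project; the point is only the shape: one supported path, and an explicit error instead of a quiet default.

```python
import json
from pathlib import Path


class ConfigError(RuntimeError):
    """Raised when the one supported config path cannot be used."""


def load_config(path: str) -> dict:
    """Load a JSON config from exactly one place, or fail with a clear error."""
    config_path = Path(path)
    if not config_path.is_file():
        # No silent defaults and no "try the next location": stop and say why.
        raise ConfigError(
            f"Config not found at {config_path}; refusing to fall back to defaults."
        )
    try:
        return json.loads(config_path.read_text(encoding="utf-8"))
    except json.JSONDecodeError as exc:
        # A malformed file is also a loud failure, with the cause attached.
        raise ConfigError(f"Config at {config_path} is not valid JSON: {exc}") from exc
```

A snippet like this can sit next to the written rule as a reference for what “clear failure” looks like, which tends to be easier to imitate than the instruction alone.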

2. Repeated Access (You as Human Clipboard)

Every time you copy/paste logs, fetch data Claude can’t reach, or shuttle information between systems, that’s infrastructure waiting to be built. Building that infrastructure might not have been “in your wheelhouse” before, but this is exactly the sort of thing AI coders excel at. Describe what you need, collaborate on the simplest implementation. In many cases it’s as simple as generating a script and adding a sentence like “use ./scripts/fetch_logs.sh to get the last hour of production logs” - first in conversation with Claude, then in your CLAUDE.md or other strategic places.

You are an extraordinarily slow bottleneck that prevents efficient iteration - investments here are well worth it.
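As a rough illustration of how small this kind of access helper can be, here is a Python sketch of the filtering logic a “last hour of logs” script might contain. It is not the contents of fetch_logs.sh; the `recent_lines` function and the assumed log format (each entry starts with an ISO-8601 timestamp followed by a space) are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def recent_lines(lines, now, window=timedelta(hours=1)):
    """Keep log entries whose leading timestamp falls within `window` of `now`.

    `now` must be timezone-aware. Lines without a timestamp (for example,
    stack-trace continuations) are kept only if the entry above them was kept.
    """
    cutoff = now - window
    kept = []
    keep_current = False
    for line in lines:
        stamp = line.split(" ", 1)[0]
        try:
            when = datetime.fromisoformat(stamp)
        except ValueError:
            # Continuation line: it belongs to the most recent timestamped entry.
            if keep_current:
                kept.append(line)
            continue
        if when.tzinfo is None:
            # Assumption for this sketch: timestamps without an offset are UTC.
            when = when.replace(tzinfo=timezone.utc)
        keep_current = cutoff <= when <= now
        if keep_current:
            kept.append(line)
    return kept
```

Once a helper like this exists, the remaining work is the one sentence that tells Claude when to reach for it.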

3. Repeated Training (You as Tutor)

Sometimes the repetition isn’t a single command - it’s an entire pattern of work you keep hand-holding your AI through.

For example, I used to run 10–20 variations of API-based LLM labeling runs on my datasets to tune prompts and understand edge cases. I (embarrassingly) tracked the experiment variations manually in a Jupyter notebook comment block. It was messy, hard to reference, and duplicated across dozens of projects. At single-human speed and scale, that was tolerable. Once Claude was in the mix, it became obviously wrong: increasingly error-prone, unreliable, and constantly demanding attention from both of us.

Once I treated that whole process as DRY debt, the shape of the work changed. I started by asking Claude to build a small CLI wrapper - one command that handles validation, execution, and logging. It replaced my messy text file with a comprehensive, queryable table and baked in several other checks and steps. Over a few weeks of incremental capability development, what used to be “Wren’s janky logging notebook” became infrastructure that dozens of people now lean on. A heavy, error-prone process that demanded constant attention became code-automated, letting us both focus on higher-level experiment design.
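The core of a wrapper like that (validate, execute, log to a queryable table) can be sketched in a few lines of Python with SQLite. This is an illustrative sketch only, not the actual tool described above: `record_run`, the `runs` table, and the required config keys are all hypothetical, and it assumes the run function returns something JSON-serializable.

```python
import json
import sqlite3
from datetime import datetime, timezone

REQUIRED_KEYS = {"prompt_version", "model", "dataset"}  # hypothetical config fields


def record_run(conn, config, run_fn):
    """Validate a config, execute the run, and log the outcome to a `runs` table."""
    missing = REQUIRED_KEYS - set(config)
    if missing:
        raise ValueError(f"Config is missing required keys: {sorted(missing)}")
    conn.execute(
        "CREATE TABLE IF NOT EXISTS runs "
        "(id INTEGER PRIMARY KEY, started_at TEXT, config TEXT, status TEXT, result TEXT)"
    )
    started_at = datetime.now(timezone.utc).isoformat()
    result, status = None, "interrupted"
    try:
        result = run_fn(config)
        status = "ok"
        return result
    except Exception as exc:
        result, status = {"error": repr(exc)}, "failed"
        raise  # record the failure, but never hide it from the caller
    finally:
        # Every attempt gets a row, whether it succeeded or not.
        conn.execute(
            "INSERT INTO runs (started_at, config, status, result) VALUES (?, ?, ?, ?)",
            (started_at, json.dumps(config, sort_keys=True), status, json.dumps(result)),
        )
        conn.commit()
```

Because every attempt lands in one table, questions like “which prompt versions have I already tried on this dataset?” become a SQL query instead of a scroll through notebook comments.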

That’s the real shift: AI didn’t just make me faster - it made it worth building the kind of software infrastructure that used to be “out of scope” for a single data scientist. It accelerated and deepened the analyses I could run and made it trivial to share that methodology with other data scientists. The process moved from “in my head and my notebook” to “in shared software + Claude Code infrastructure,” raising capabilities while reducing onboarding overhead.

Together, these three kinds of repetition - corrections, access, and training - tell you what to encode. The toolkit in the next section is about where that encoding should live.

The Toolkit: Where to Encode What

Imperfect Adherence

A strength of AI coding is that most of your “encoding” is language-based and flexible, not rigid code. The flip side is: you will never get 100% adherence.

Rules you put in CLAUDE.md will sometimes be ignored, no matter how many **CRITICAL**s you add. Commands and agents that worked once will fail in slightly different situations.

This makes your job more nuanced than “throw everything you need to know in a file.” You have to be strategic in your context engineering.

After a repeated miss, the first question shouldn’t be “What’s wrong with the model?” but “How did I set it up for failure?”

Were expectations clear? Did I encode this in the right layer, or bury it in a long doc where the instruction is low-salience noise in an already crowded context window?

To get better behavior, you have to be intentional about where things live so your primary coordinator gets the right information at the right time. That’s what the toolkit is for: deciding where each kind of encoding should live so it can actually work.

CLAUDE.md: Orientation Guide

CLAUDE.md is an always-loaded brief on what this repo is, how it behaves, and how you expect Claude to work with it. In practice, it should answer three questions:

What is this project?

Plain language about what the system does and what “good” looks like.

How do we work here?

The scientific mindset you expect, communication styles, whether to fail loudly instead of inventing fallbacks, whether to bias toward a quick internal prototype or production-quality changes - your work style and “culture.”

Where is the deeper context?

Pointers to resources (docs, repos, folders, or other toolkit components like agents and commands) that will help across different tasks required to work in this system:

“See ARCHITECTURE.md before proposing structural changes.”

“See analysis-examples/ for templates of our most common analysis patterns.”

“Use the log-debugger agent to investigate job failures.”

That keeps CLAUDE.md lean while giving Claude a durable mental model: what this thing is, how to behave inside it, and where to go for depth - without you re-explaining the basics in every prompt.

Like everything in this toolkit, CLAUDE.md is a living document. Any time you catch yourself repeating the same explanation or correction, ask: “Should this be integrated into the CLAUDE.md instead?” With continuous refinement and refactoring, it becomes not only valuable for Claude, but also a helpful, durable, human-readable artifact.

Commands: Repeatable Workflows

Commands are your SOPs - standard operating procedures that anyone can run consistently. They live in your main context window, so they have full access to your current conversation and project state. They let you invoke structured workflows without those instructions cluttering Claude’s baseline behavior when you don’t need them.

Unlike static templates, commands are LLM-based instructions, which makes them much better at handling branching and ambiguous situations. They can ask clarifying questions, adapt to what they find, recover from unexpected states, trigger other commands and agents, and more. Examples:

/review - analyze current changes, run tests, check for obvious issues.

/analyze-experiment-output - pull recent evaluation runs, summarize results.

Commands vs. Agents. Use a command when the workflow should:

See your full conversation and current work-in-progress, and

Run as a structured “mode” of your main collaborator.

Use an agent when the work is a separable sub-problem that benefits from a fresh, focused context (deep log-diving, extensive docs research, large search spaces). Think of commands as orchestrated workflows in your main session; agents as specialists you spin up for isolated deep work.

Agents: Delegated Sub-Problems

Agents are specialized sub-contexts with their own system prompts. They do deep work in isolation and return condensed summaries.

Use an agent when:

The task is token-heavy. Digging through 500 lines of logs, exploring a new API’s documentation, analyzing a complex stack trace - this would bloat your main context and push out the conversation history you actually need.

The task is at a lower altitude. Your coordinator should stay at system-level, strategic thinking. Detailed debugging, exhaustive research, or brute-force exploration is a sub-problem you delegate.

You want parallel exploration. Multiple agents can run simultaneously on independent tasks, then you synthesize their findings.

Think of agents as specialists with a job description and a clear ask. For example:

log-analyzer - “Spin up a log-analyzer agent to find out which runs failed, review the logs and tell me the most likely root cause, plus your confidence.”

query-builder - “Use a query-builder agent to debug this query, test how memory efficient it is, and propose improvements.”

docs-researcher - “Create a researcher agent to read this API’s docs and summarize the key endpoints that I will need for our current task.”

They bring you summarized outputs, not a play-by-play of every step.

Anthropic calls this “separation of concerns”: each agent gets its own tools, prompts, and exploration trajectory. The detailed work stays isolated; your coordinator focuses on synthesis and decision-making. You can define reusable agents in your repo, or spin up one-off specialists via prompt (“have a subagent do [task]”).

Hooks: Process Encoded

Hooks are event-driven automation that fire when Claude takes specific actions. You define a trigger and what should happen when it fires.

Trigger: “Whenever someone runs gh pr create…”

Action: “…check if the /review command was run; if not, ask them to do it first.”

This isn’t just guardrails - it’s how you encode your team’s process into the system itself. Hooks let you:

Hard-block - prevent an action entirely.

Soft-guide - suggest the right path without blocking anything.

Automate - format, lint, notify, or checkpoint with no extra prompt.

The real power is in soft enforcement. Don’t block PRs that skip review - just make the right path obvious and low-friction. People (and AIs) follow the path of least resistance; hooks let you shape that path.

Use hooks when you find yourself saying:

“I wish no one would run X without doing Y first.”

“Every time we do this, we forget to also log / format / notify.”

“I want this check to happen every time, even if I forget to ask.”
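The gh pr create example above could be sketched as a small Python hook script. Everything here is an assumption made for illustration: that the hook receives a JSON payload on stdin with the shell command under `tool_input.command`, that exit code 2 means “block this action,” and that /review leaves behind a marker file at the hypothetical path `.claude/review_done`. Check your hook runner’s documentation for the actual payload and exit-code conventions before relying on any of it.

```python
import json
import sys
from pathlib import Path

REVIEW_MARKER = Path(".claude/review_done")  # hypothetical marker written by /review


def decide(payload, marker=REVIEW_MARKER, hard_block=False):
    """Return (action, message), where action is "allow", "nudge", or "block"."""
    # Assumed payload shape: {"tool_input": {"command": "<shell command>"}}
    command = payload.get("tool_input", {}).get("command", "")
    if "gh pr create" not in command or marker.exists():
        return "allow", ""
    message = "No /review recorded for this change. Run /review, then retry gh pr create."
    return ("block" if hard_block else "nudge"), message


def main():
    """Hook entry point: read the payload from stdin, report via stderr and exit code."""
    action, message = decide(json.load(sys.stdin))
    if message:
        print(message, file=sys.stderr)
    # Assumption: the hook runner treats exit code 2 as "block this action".
    return 2 if action == "block" else 0
```

To use it as a script, the file would end with `sys.exit(main())`. The `hard_block` flag is the switch between the soft-guide and hard-block styles: the default only nudges, which matches the preference for making the right path obvious rather than forbidding the wrong one.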

Tools: Taught vs. Formal

Taught capabilities are things Claude can do because you built them together and told it how - but they’re not registered as first-class tools. Claude learns about them from CLAUDE.md, commands, or prior conversation.

“Use ./scripts/fetch_logs.sh to get the last hour of production logs.”

“Run ./scripts/eval_runner.py to kick off a configured evaluation with logging.”

For work that should be code, not conversation - deterministic, repeatable, the same way every time - you build the script with Claude, then teach Claude when to use it. The LLM decides when conversationally; the code handles how programmatically.

Formal tools are the ones Claude sees as first-class actions in its toolset: they’re registered, schema’d, permission-gated, and discoverable (you’ll see them in /permissions or in an agent’s tool list).

This includes both:

Built-in tools like Edit, Bash, Read, Write, Glob, Grep - always available in Claude Code.

Custom tools you register via the tools API or Model Context Protocol (MCP): anything from

a wrapper around a CLI or script, to

a connector to external systems (databases, internal services, APIs, search).

MCP is a powerful way to standardize and share tools across projects and agents, but it’s just one subset of formal tools, not the whole category.

A formal tool is essentially half language, half code:

In code, you define: “Given these inputs, do X and return Y.”

In language, you describe: “What this tool is for, and when to use it.”

Claude uses the natural language description to decide when the tool is relevant and how to fill the arguments, then runs your code to do the work. It’s conversational at the edges, programmatic in the middle.
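Here is a minimal Python sketch of that split, using a hypothetical `label_agreement` tool. The spec uses a name, a natural-language description, and a JSON-Schema-style `input_schema`; how such a spec actually gets registered depends on whether you go through a tools API or an MCP server, so treat the exact layout and the `call_tool` dispatcher as assumptions.

```python
# The "language" half: what the tool is for and when to use it.
TOOL_SPEC = {
    "name": "label_agreement",  # hypothetical tool name
    "description": (
        "Compute the fraction of items on which two labeling runs agree. "
        "Use this when comparing two sets of labels for the same items; "
        "do not use it to inspect individual examples."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "labels_a": {"type": "array", "items": {"type": "string"}},
            "labels_b": {"type": "array", "items": {"type": "string"}},
        },
        "required": ["labels_a", "labels_b"],
    },
}


# The "code" half: given these inputs, do X and return Y.
def label_agreement(labels_a, labels_b):
    if not labels_a or len(labels_a) != len(labels_b):
        raise ValueError("Label lists must be non-empty and the same length.")
    matches = sum(a == b for a, b in zip(labels_a, labels_b))
    return {"n": len(labels_a), "agreement": matches / len(labels_a)}


HANDLERS = {"label_agreement": label_agreement}


def call_tool(name, arguments):
    """Dispatch a model-proposed tool call to its handler after a basic check."""
    if name not in HANDLERS:
        raise KeyError(f"Unknown tool: {name}")
    missing = [k for k in TOOL_SPEC["input_schema"]["required"] if k not in arguments]
    if missing:
        raise ValueError(f"Missing required arguments: {missing}")
    return HANDLERS[name](**arguments)
```

The description is the part worth iterating on: it is what the model reads when deciding whether the tool applies, while the function body stays deterministic.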

The progression usually looks like:

Start as an informal taught capability → validate it works and that you use it a lot → graduate it to a formal tool when it needs to be robust, discoverable, and shared.

When you notice other people depending on it, or you need more safety, observability, or reuse across agents and projects, that’s your cue to formalize it.

For more detail on how Claude reasons about and uses tools, Anthropic’s “Writing Tools for Agents” is a great deep dive.

Write less code, do more good science.

This isn’t the end of engineering rigor; it’s the scaling of it.

You aren’t just writing code; you’re encoding reasoning, intent, and judgment. The bottleneck is no longer how many library calls you’ve memorized, but whether you can decompose and formalize your intent clearly enough for an ecosystem of agents to execute.

That’s always been the bedrock of good engineering and science. But this shift opens a critical door. Because the interface to this power is now written language, we can plug the rigorous thinking of other disciplines directly into the development loop. I’ve seen investigators probing edge cases, lawyers stress-testing risk, and Ops teams defining evaluations - not by filing tickets, but by engaging with the system itself.

We need this depth of collaboration to survive the pace of modern development. We are wiring together models and systems faster than traditional development can handle. If we don’t encode our best thinking into shared, intelligible workflows, we end up with fragile, ad-hoc analysis. But when we do, we can powerfully pool, share, and amplify diverse individual rigor into scalable systems.

The old flex was how much you could outperform the AI.

The new flex is how much the AI outperforms because you taught it to operate that way.

Wren is a Senior Staff Research Engineer at a Fortune 500 company, working on AI evaluation, tooling, and risk mitigation. She also runs workshops that have introduced hundreds of data scientists and engineers to AI coding assistants.

I like this framing. I haven’t seen it put quite this way, and I think it’s a helpful perspective. That said, we can’t fully absolve the model itself of responsibility.

We accept fallibility everywhere, but always relative to risk: duct tape on a leaky faucet is OK, but it isn’t tolerated in a spacecraft. AI may not be the spacecraft (yet), but it’s well above the “faucet repair” tier, which means there’s a limit to how much agents, workflows, and post-hoc constraints can compensate.

At some point, duct tape stops working, and reliability has to be addressed at the foundational architecture and training level, where the problem truly resides.